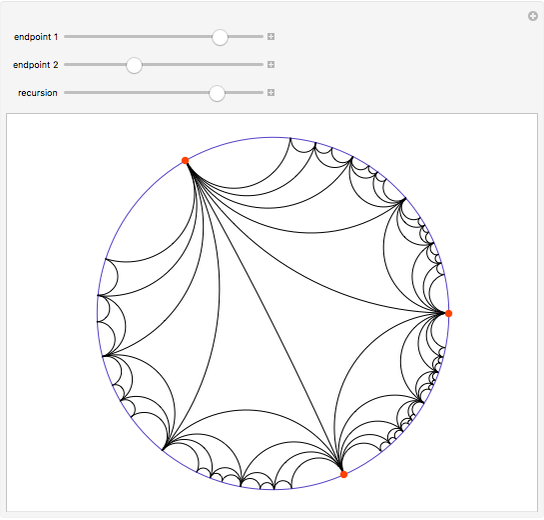

It turns out that hyperbolic space can embed graphs, particularly hierarchical graphs such as trees, with lower distortion than is possible in Euclidean space.
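To make this concrete, here is a minimal sketch of the standard distance function of the Poincaré disk model. The function names `poincare_distance` and `tree_likeness` are our own, for illustration only. The sketch compares the direct hyperbolic distance between two points at the same radius with the length of the detour through the origin. As the points move toward the boundary, the two lengths converge, so shortest paths bend through the center much as paths in a tree pass through a common ancestor.

```python
import math

def poincare_distance(u, v):
    """Hyperbolic distance between two points strictly inside the unit Poincaré disk.

    d(u, v) = arcosh(1 + 2 * |u - v|^2 / ((1 - |u|^2) * (1 - |v|^2)))
    """
    def sq_norm(p):
        return p[0] ** 2 + p[1] ** 2

    if sq_norm(u) >= 1.0 or sq_norm(v) >= 1.0:
        raise ValueError("points must lie strictly inside the unit disk")
    diff = (u[0] - v[0], u[1] - v[1])
    denom = (1.0 - sq_norm(u)) * (1.0 - sq_norm(v))
    return math.acosh(1.0 + 2.0 * sq_norm(diff) / denom)

def tree_likeness(r):
    """Direct distance between two points at radius r (90 degrees apart), divided by
    the length of the detour through the origin. A value of 1.0 would be perfectly tree-like."""
    a, b, origin = (r, 0.0), (0.0, r), (0.0, 0.0)
    detour = poincare_distance(a, origin) + poincare_distance(origin, b)
    return poincare_distance(a, b) / detour

for r in (0.5, 0.9, 0.99):
    print(f"r = {r}: direct / via-origin = {tree_likeness(r):.3f}")
```

The printed ratio grows toward 1 as `r` increases. In the Euclidean plane, the same ratio stays at √2/2 ≈ 0.707 for every `r`.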

When embedding one space into another, the big goal is to preserve distances and more complex relationships. One example is embedding taxonomies, such as Wikipedia categories, lexical databases like WordNet, and phylogenetic relations. The motivation is to combine structural information with continuous representations suitable for machine learning methods. Hyperbolic embeddings have captured the attention of the machine learning community through two exciting recent proposals. Studying the tradeoffs and theoretical properties of these strategies gives us a new, simple, and scalable PyTorch-based implementation that we hope people can extend!
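One common way to quantify how well an embedding preserves distances is *average distortion*. For every pair of nodes, it compares the graph's shortest-path distance with the distance between their embedded points, then averages the relative error. The sketch below is a hypothetical illustration: `hop_distances` and `average_distortion` are our own names, and the text does not say which quality metric the proposals use. The example embeds a small star graph in the Euclidean plane, where its leaves cannot all be placed at the correct distance of 2 from one another.

```python
import math
from collections import deque

def hop_distances(adj, source):
    """Shortest-path (hop-count) distances from source in an unweighted graph, via BFS."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    return dist

def average_distortion(adj, emb, metric):
    """Mean relative error |d_emb(u, v) - d_graph(u, v)| / d_graph(u, v) over all
    connected pairs of distinct nodes; 0.0 means every distance is preserved exactly."""
    nodes = list(adj)
    total, pairs = 0.0, 0
    for i, u in enumerate(nodes):
        d_graph = hop_distances(adj, u)
        for v in nodes[i + 1:]:
            if v not in d_graph:
                continue  # u and v lie in different connected components
            total += abs(metric(emb[u], emb[v]) - d_graph[v]) / d_graph[v]
            pairs += 1
    return total / pairs if pairs else 0.0

# A star (one hub, three leaves) placed in the Euclidean plane with unit-length edges.
# The leaves are 2 hops apart in the graph but only sqrt(3) apart in the plane.
star = {"hub": ["a", "b", "c"], "a": ["hub"], "b": ["hub"], "c": ["hub"]}
angles = {"a": 0.0, "b": 2 * math.pi / 3, "c": 4 * math.pi / 3}
emb = {"hub": (0.0, 0.0)}
emb.update({leaf: (math.cos(t), math.sin(t)) for leaf, t in angles.items()})
print(average_distortion(star, emb, math.dist))  # about 0.067, i.e. (2 - sqrt(3)) / 4
```

Passing the metric in as a parameter lets the same check score a Euclidean embedding and a hyperbolic one side by side.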

Poincaré disk graph theory: how to

Valuable knowledge is encoded in structured data, such as carefully curated databases, graphs of disease interactions, and even low-level information like hierarchies of synonyms. Fundamentally, the problem is that these objects are discrete and structured, while much of machine learning works on continuous, unstructured data. Embedding these structured, discrete objects in a way that can be used with modern machine learning methods, including deep learning, is challenging.
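One well-known way to turn a discrete hierarchy into continuous coordinates is a combinatorial construction in the style of Sarkar. The root of the tree is placed at the center of the Poincaré disk. Each node is then moved to the origin with a Möbius isometry, and its children are fanned out evenly around it at a fixed hyperbolic distance `tau`. The sketch below is our own illustration of that idea, not necessarily the method used by the proposals mentioned earlier. The names `embed_tree`, `to_origin`, `from_origin`, and `hyperbolic_distance` are hypothetical. In general, larger edge lengths lower distortion but push nodes toward the boundary, where floating-point precision runs out.

```python
import cmath
import math

def to_origin(a, z):
    """Möbius isometry of the Poincaré disk that sends the point a to 0."""
    return (z - a) / (1 - a.conjugate() * z)

def from_origin(a, w):
    """Inverse of to_origin: sends 0 back to the point a."""
    return (w + a) / (1 + a.conjugate() * w)

def hyperbolic_distance(u, v):
    """Poincaré-disk distance between complex numbers u, v with |u|, |v| < 1."""
    return math.acosh(1 + 2 * abs(u - v) ** 2 / ((1 - abs(u) ** 2) * (1 - abs(v) ** 2)))

def embed_tree(children, root, tau=1.0):
    """Sarkar-style placement of a rooted tree in the Poincaré disk.

    children: dict mapping each node to a list of its children.
    tau: hyperbolic length assigned to every edge.
    Returns a dict mapping node -> complex coordinate inside the unit disk.
    """
    r = math.tanh(tau / 2)  # Euclidean radius of a point at hyperbolic distance tau from 0
    pos = {root: 0j}
    stack = [(root, None)]
    while stack:
        node, parent = stack.pop()
        kids = children.get(node, [])
        if not kids:
            continue
        x = pos[node]
        if parent is None:
            # The root sits at 0: spread its children evenly around the full circle.
            angles = [2 * math.pi * k / len(kids) for k in range(len(kids))]
        else:
            # Move x to the origin, find the direction of its parent, and fan the
            # children out evenly over the remaining directions.
            base = cmath.phase(to_origin(x, pos[parent]))
            slots = len(kids) + 1
            angles = [base + 2 * math.pi * k / slots for k in range(1, slots)]
        for kid, theta in zip(kids, angles):
            # Place the child at distance tau from the origin, then map it back next to x.
            pos[kid] = from_origin(x, cmath.rect(r, theta))
            stack.append((kid, node))
    return pos

tree = {"root": ["a", "b", "c"], "a": ["a1", "a2"], "b": ["b1"]}
pos = embed_tree(tree, "root", tau=1.0)
for parent, kids in tree.items():
    for kid in kids:
        print(parent, "->", kid, round(hyperbolic_distance(pos[parent], pos[kid]), 6))
```

Because Möbius maps of this form are isometries of the disk, every printed edge length should be 1.0, matching `tau`, no matter how deep the node sits.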

Poincaré disk graph theory: code

Hyperbolic Embeddings with a Hopefully Right Amount of Hyperbole, by Chris De Sa, Albert Gu, Chris Ré, and Fred Sala. Check out our paper on arXiv, and our code on GitHub!

Related Words runs on several different algorithms, which compete to get their results higher in the list. One such algorithm uses word embeddings to convert words into high-dimensional vectors that represent their meanings. The vectors of the words in your query are compared against a huge database of pre-computed vectors to find similar words. Another algorithm crawls through ConceptNet to find words that have some meaningful relationship with your query. These algorithms, and several more, are what allow Related Words to give you related words, rather than just direct synonyms. As well as finding words related to other words, you can enter phrases, and it should give you related words and phrases, so long as the phrase or sentence you entered isn't too long.

You will probably get some weird results every now and then; that's just the nature of the engine in its current state. There is still lots of work to be done to get this to give consistently good results, but I think it's at the stage where it could be useful to people, which is why I released it.
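The text does not describe exactly how the query vectors are compared, so the following is only a hypothetical sketch of the vector-lookup step. Toy three-dimensional vectors stand in for a real pre-computed database. Cosine similarity is assumed as the comparison, and phrases are handled by averaging their word vectors, which is also an assumption. `VECTORS` and `most_similar` are illustrative names, not part of any real Related Words API.

```python
import math

# Hypothetical toy "pre-computed" word vectors; a real system would load
# high-dimensional embeddings trained on a large corpus.
VECTORS = {
    "cat":    [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "dog":    [0.8, 0.3, 0.2],
    "car":    [0.1, 0.05, 0.95],
}

def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def most_similar(query, k=2):
    """Return the k known words closest to the query (a word or a short phrase)."""
    words = query.lower().split()
    known = [VECTORS[w] for w in words if w in VECTORS]
    if not known:
        return []  # nothing in the query has a vector
    # Average the vectors of the query words so that phrases also work.
    dim = len(known[0])
    q = [sum(vec[i] for vec in known) / len(known) for i in range(dim)]
    scored = [(cosine(q, vec), word) for word, vec in VECTORS.items() if word not in words]
    scored.sort(reverse=True)  # highest similarity first
    return [word for _, word in scored[:k]]

print(most_similar("cat"))
print(most_similar("cat dog", k=1))
```

A brute-force scan like this is fine for a toy table; a database with millions of vectors would normally use an approximate nearest-neighbor index instead.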
